Digital Foundry has released an in-depth look at the upscaling tech that’ll be included with Intel’s upcoming Arc GPUs and compared its performance to Nvidia’s offering, Deep Learning Super Sampling (DLSS). Based on the tests they’ve run so far, Intel’s Xe Super Sampling, or XeSS for short, seems to do a reasonable job of holding its own against more mature technologies — though it’s worth noting that Digital Foundry only ran tests with Intel’s highest-end card, the Arc A770, and mostly in a single game, Shadow of the Tomb Raider.

The idea behind XeSS and other technologies like it is to run your game at a lower resolution, then use machine learning to upscale it in a way that looks much better than more basic upscaling methods. In practice, this lets you run games at higher frame rates or turn on fancy effects like ray tracing without giving up a huge amount of performance, because your GPU is actually rendering fewer pixels and then upscaling the resulting image, often using dedicated hardware. For example, according to Digital Foundry, if you have a 1080p display, XeSS will run the game at 960 x 540 in its highest performance (AKA highest FPS) mode and at 720p in its “Quality” mode before upscaling it to your monitor’s native resolution. If you want more details on how exactly it does this, I recommend checking out Digital Foundry’s write-up on Eurogamer.
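To put rough numbers on "rendering fewer pixels," here is a minimal Python sketch of the arithmetic. The per-axis scale factors (2x and 1.5x) are inferred from the 1080p figures quoted above rather than taken from Intel's documentation, and the function names are made up for illustration.

```python
from fractions import Fraction

# Per-axis scale factors inferred from the article's 1080p example
# (960 x 540 and 1280 x 720). These are assumptions, not an official list.
SCALE_FACTORS = {
    "performance": Fraction(2, 1),
    "quality": Fraction(3, 2),
}

def render_resolution(out_w, out_h, mode):
    """Internal resolution the game renders at before upscaling."""
    scale = SCALE_FACTORS[mode]
    return int(out_w / scale), int(out_h / scale)

def pixel_fraction(out_w, out_h, mode):
    """Share of the output's pixels that the GPU actually renders."""
    w, h = render_resolution(out_w, out_h, mode)
    return (w * h) / (out_w * out_h)

if __name__ == "__main__":
    for mode in SCALE_FACTORS:
        w, h = render_resolution(1920, 1080, mode)
        share = pixel_fraction(1920, 1080, mode)
        print(f"{mode}: {w} x {h} ({share:.0%} of native pixels)")
```

Under these assumptions, the performance mode renders only a quarter of the pixels of native 1080p, and the quality mode renders a bit under half, which is where the extra frame rate comes from.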

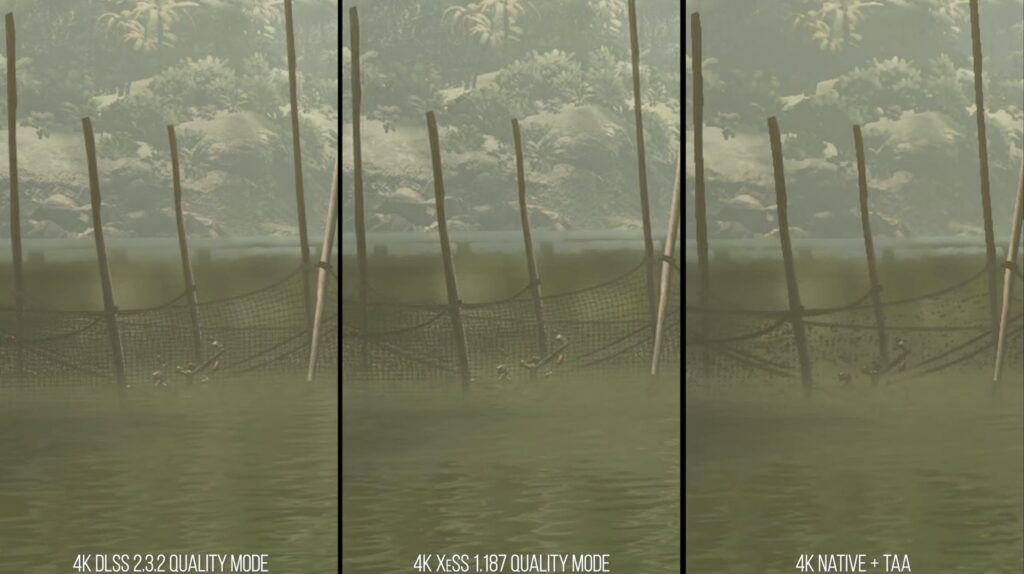

In Digital Foundry’s tests, XeSS did this task pretty well when running on the Arc A770 (the tech will be usable on other, non-Xe graphics cards as well, including the ones integrated into Intel’s processors and even on Nvidia’s cards). It provided a solid bump in frame rates compared to running the game in native 1080p or 4K, and there wasn’t the huge drop in quality you’d expect to see from rendering at a lower resolution without any sort of smart upscaling. Put side-by-side with the results from Nvidia’s DLSS, which is more or less the gold standard for AI-powered upscaling at this point, XeSS was able to retain a comparable amount of sharpness and detail in a lot of areas, such as foliage, character models, and backgrounds.

Digital Foundry found that XeSS added two to four milliseconds to frame times, or the amount of time a frame is displayed on the screen before being replaced. That could, in theory, make the game feel less responsive, but in practice, the fact that you’re getting more FPS helps even things out a bit.
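For a sense of scale, frame time and frame rate are just inverses of each other, so the trade-off can be sketched in a few lines of Python. This assumes the two to four milliseconds behave like a fixed cost added to every frame. The 100 FPS starting point is a hypothetical number for illustration, not a Digital Foundry measurement.

```python
def frame_time_ms(fps):
    """Milliseconds each frame stays on screen at a given frame rate."""
    return 1000.0 / fps

def fps_with_overhead(base_fps, overhead_ms):
    """Frame rate after adding a fixed per-frame cost in milliseconds."""
    return 1000.0 / (frame_time_ms(base_fps) + overhead_ms)

if __name__ == "__main__":
    # Hypothetical: the game runs at 100 FPS at the lower internal
    # resolution before the upscaling cost is counted.
    base_fps = 100.0
    for overhead in (2.0, 4.0):
        result = fps_with_overhead(base_fps, overhead)
        print(f"+{overhead:.0f} ms: {result:.1f} FPS")
```

In this made-up case, the upscaling cost trims the lower-resolution frame rate somewhat, but the result is still a net win as long as it stays above what the card manages at native resolution.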

With that said, XeSS had a few hiccups that either weren’t present or were noticeably less intense when using DLSS. Intel’s tech particularly struggled with thin details, sometimes showing flickering moiré patterns or bands. These types of artifacts could definitely be distracting depending on where they showed up, and they got worse as Digital Foundry pushed the system, asking it to upscale lower and lower resolution images to 1080p or 4K (something you might have to do with especially demanding games). Nvidia’s tech wasn’t totally immune to these issues, especially in modes that focused more on performance than image quality, but they certainly seemed less prevalent. XeSS also added some extremely noticeable jittering effects to water and some less intense ghosting to certain models when they were moving.

Intel also struggled to keep up with Nvidia when it came to a few particular subjects — notably, DLSS handled Lara Croft’s hair significantly better than XeSS. There were one or two times when the results from XeSS looked better to my eyes, though, so your mileage may vary.

XeSS is still obviously in its early stages, and specs about the Arc GPUs it’ll mainly be assisting are just starting to come out. That makes it hard to tell how it’ll perform on Intel’s lower-end desktop lineup and on the laptop graphics cards that have been around for a few months. It’s also worth noting that, as with DLSS, XeSS won’t work with every game — so far, Intel’s listed around 21 games that will support XeSS, compared to the approximately 200 titles that DLSS works with (though the company does say it’s collaborating with “many game studios” like Codemasters and Ubisoft to get the tech into more games).

Still, it’s nice to at least get a taste of how it will work and to know that it’s, at the very least, competent. Without naming names, other first tries at this sort of tech haven’t necessarily held up to DLSS as well as XeSS has. While we still don’t know whether Intel’s GPUs are actually going to be any good (especially compared to Nvidia’s upcoming RTX 40-series and the RDNA 3 GPUs from AMD, which has its own upscaling tech called FSR), it’s good to know that at least one aspect of them is a success. And if Intel’s cards do end up being bad for gaming, XeSS may actually be able to help with that. It’s the small wins, really.

Update September 14th 7:30PM ET: updated to note that Intel has announced more games supporting XeSS than are currently listed on its website.