Argonne National Laboratory, US Department of Energy

Scientists at the U.S. Department of Energy’s Argonne National Laboratory recently used the second-fastest supercomputer in the world to create the biggest, most complicated simulation of the universe ever. The Frontier supercomputer, housed at Oak Ridge National Laboratory (ORNL), was the fastest in the world until El Capitan at Lawrence Livermore National Laboratory in Livermore, California, surpassed it in November 2024.

According to Live Science, “Frontier used a software platform called the Hardware/Hybrid Accelerated Cosmology Code (HACC) as part of ExaSky, a project that formed part of the U.S. Department of Energy’s (DOE) $1.8 billion Exascale Computing Project.”

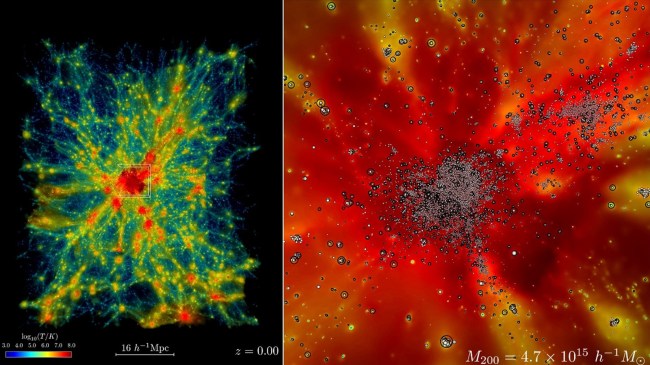

Frontier’s astrophysical simulation covers a volume of the universe 10 billion light-years across. It incorporates detailed physics models for dark matter, dark energy, gas dynamics, star formation, and black hole growth. According to Universe Today, this will be extremely useful for determining how galaxies form and how the large-scale structure of the universe evolves.

“There are two components in the universe: dark matter — which as far as we know, only interacts gravitationally — and conventional matter, or atomic matter,” said project lead Salman Habib, division director for Computational Sciences at Argonne.

“So, if we want to know what the universe is up to, we need to simulate both of these things: gravity as well as all the other physics including hot gas, and the formation of stars, black holes and galaxies. The astrophysical ‘kitchen sink’ so to speak. These simulations are what we call cosmological hydrodynamics simulations.”

Creating the largest, most complicated simulation of the universe ever took a lot of power: 21 MW of electricity, enough to power about 15,000 single-family homes in the United States. The scientists believe it was worth it, though.
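A megawatt measures a rate of power, not a total amount of energy, so the household comparison describes an average continuous draw. As a rough sanity check, the short sketch below works out what that comparison implies per home. It assumes each home draws power steadily around the clock, and the variable names are illustrative only.

```python
# Figures quoted in the article.
POWER_MW = 21            # electrical draw cited for the simulation, in megawatts
HOMES = 15_000           # number of U.S. single-family homes it is compared to
HOURS_PER_YEAR = 8_760   # 365 days * 24 hours (non-leap year)

# Average continuous draw per home implied by the comparison, in kilowatts.
kw_per_home = POWER_MW * 1_000 / HOMES

# Energy per home if that average draw were sustained for a full year, in kWh.
kwh_per_year = kw_per_home * HOURS_PER_YEAR

print(f"{kw_per_home:.2f} kW per home, about {kwh_per_year:,.0f} kWh per year")
```

Under that assumption, the comparison works out to about 1.4 kW per home, or roughly 12,000 kWh over a year.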

“For example, if we were to simulate a large chunk of the universe surveyed by one of the big telescopes such as the Rubin Observatory in Chile, you’re talking about looking at huge chunks of time — billions of years of expansion,” said Habib. “Until recently, we couldn’t even imagine doing such a large simulation like that except in the gravity-only approximation.”

“It’s not only the sheer size of the physical domain, which is necessary to make direct comparison to modern survey observations enabled by exascale computing,” said Bronson Messer, Oak Ridge Leadership Computing Facility director of science. “It’s also the added physical realism of including the baryons and all the other dynamic physics that makes this simulation a true tour de force for Frontier.”

Any computer capable of at least 1 exaFLOPS (1,000 petaFLOPS, or 10^18 floating-point operations per second) is classified as an “exascale” supercomputer. Frontier can reach 1.4 exaFLOPS. El Capitan holds the record at 1.7 exaFLOPS.
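To make the units concrete, the sketch below converts the figures quoted in this article to exaFLOPS and checks them against the 1 exaFLOPS threshold. The helper name `is_exascale` is hypothetical, and the check is a simplification: it compares a single quoted rate against the threshold rather than modeling how benchmark rankings are actually measured.

```python
# SI prefixes: 1 petaFLOPS = 10**15 FLOPS, 1 exaFLOPS = 10**18 FLOPS.
PETA = 10**15
EXA = 10**18


def is_exascale(flops: float) -> bool:
    """Return True if a rate of `flops` meets the 1 exaFLOPS threshold."""
    return flops >= EXA


# Rates quoted in the article, plus a machine just under the threshold.
systems = {
    "Frontier": 1.4 * EXA,
    "El Capitan": 1.7 * EXA,
    "999 petaFLOPS machine": 999 * PETA,
}

for name, rate in systems.items():
    print(f"{name}: {rate / EXA:.3f} exaFLOPS, exascale={is_exascale(rate)}")
```

A machine rated at 999 petaFLOPS works out to 0.999 exaFLOPS, just short of exascale. Frontier and El Capitan both clear the threshold.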

Frontier has also been used for other research, such as simulating an entire year of global climate data, creating new substrates and geometries, modeling chemical interactions to predict material behavior, modeling diseases, developing new drugs and better batteries, and finding new materials for energy storage. Eventually, it may even be used to simulate a living cell. However, according to Live Science, what really excites scientists is how exascale computing can supercharge artificial intelligence (AI).