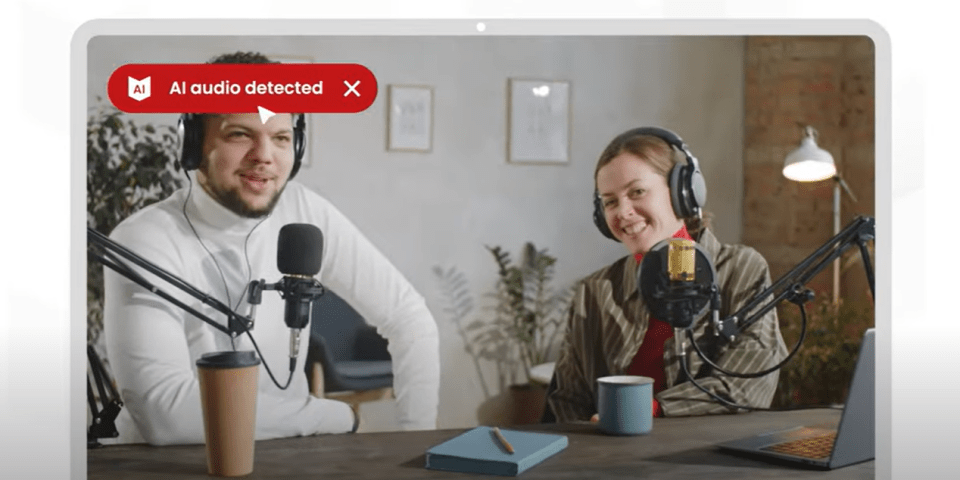

A CLEVER new tool can sniff out a deepfake video you might be watching online, and alert you that the clip may have been digitally manipulated.

The criminal industry in cyberspace has moved from malware-driven attacks, such as password theft, to social engineering scams that utilise AI.

Software that can recreate digital audio and video clones, known as deepfakes, has grown increasingly sophisticated in the past year.

Experts have previously told The Sun that deepfakes are the “biggest evolving threat” when it comes to cybercrime.

But the Deepfake Detector, recently unveiled by McAfee, is one of the first consumer-focused tools that can alert you to the threat before it fools you completely.

It is an automated tool that scans the videos you watch online in real time and sends you a pop-up notification warning you how likely it is that the clip contains AI-generated audio.

McAfee says the tool detects AI-manipulated content with a 96 per cent success rate, a figure the company says will only improve as the tool gets smarter.

“Scams are becoming harder to identify as generative AI removes the traditional hallmarks of misspelled words and poor grammar,” Oliver Devane, Senior Security Researcher at McAfee, told The Sun.

“Since deepfakes can look so uncannily real, they make it harder to trust what you watch or listen to online.

“Scammers make use of high-profile people such as celebrities and politicians to gain the trust of their targets, as they can add credibility to scams and make you more vulnerable to scams and disinformation.”

The Deepfake Detector won’t explicitly tell you that what you’re watching is dangerous or a scam – because it might not be.

YouTubers and influencers often use AI to help create audio for legitimate videos.

It’s up to you to judge whether the video you’re watching can be trusted, for example if it is offering too-good-to-be-true investment advice.

The tool doesn’t yet detect if the video itself has been digitally altered, and it’s not yet available on smartphones where consumers arguably do most of their video watching.


That being said, McAfee had to start somewhere, according to Devane, who shifted his focus to AI last year after nearly two decades in malware research.

“Deepfake voices are often more trusted than images as most people are more forgiving for bad quality video, but their suspicions are raised if the person’s voice doesn’t sound quite right, which is why we started with audio,” he said.

“Scammers also pull on emotions and try to catch people when their guard is down, for example a phone call out of the blue from a loved one in distress is more likely to make someone believe the deepfake is actually them.”

Generative AI can make threats “much more lethal” without scammers having to put in any extra work, Devane said at a recent briefing unveiling the new product.

That’s why deepfake defence tools like McAfee’s will only grow more intelligent and more common across the wider cybersecurity industry.

The only downside is that most people can’t buy it yet.

McAfee’s Deepfake Detector is currently only available on specific hardware, such as Lenovo’s new line of laptops and an upcoming line of Asus devices.

What are the arguments against AI?

Artificial intelligence is a highly contested issue, and it seems everyone has a stance on it. Here are some common arguments against it:

Loss of jobs – Some industry experts argue that AI will create new niches in the job market, and that as some roles are eliminated, others will appear. However, many artists and writers insist the issue is an ethical one, as generative AI tools are being trained on their work and wouldn’t function otherwise.

Ethics – When AI is trained on a dataset, much of the content is taken from the Internet. This is almost always, if not exclusively, done without notifying the people whose work is being taken.

Privacy – Content from personal social media accounts may be fed to language models to train them. Concerns have grown as Meta rolls out its AI assistants across platforms like Facebook and Instagram. There has been legal pushback: in 2016, the EU adopted legislation to protect personal data, and similar laws are in the works in the United States.

Misinformation – As AI tools pull information from the Internet, they may take things out of context or suffer hallucinations that produce nonsensical answers. Tools like Copilot on Bing and Google’s generative AI in search are always at risk of getting things wrong. Some critics argue this could have lethal effects, such as AI giving out incorrect health information.