In 2022 was the year crypto imploded, then 2023 is the year that artificial intelligence has exploded. Large language models, neural networks, and machine learning have helped drive the field of AI forward at warp speed. You can now use AI to generate your album art—and maybe even your music.

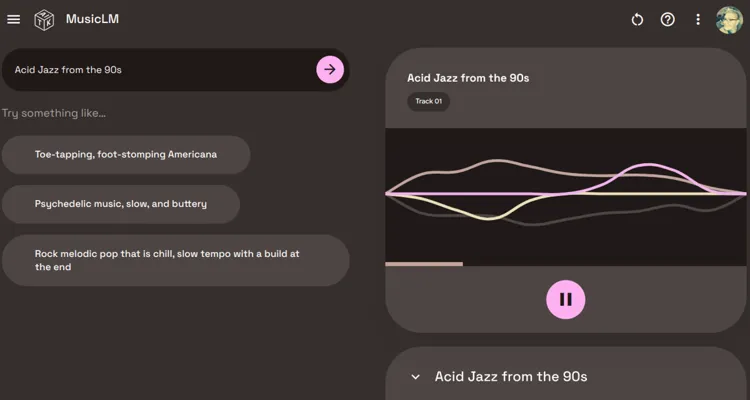

Google has opened up its AI Test Kitchen to those who are interested in giving the new technology a spin. After signing up I was promptly approved, so I took the weekend to play around with the tool and how well it can generate different genres of music, create fusions of music, or fulfill a purpose. The short of it is that MusicLM is highly capable in some genres, less than fluent in others. Let’s take a peek at how the technology works before we delve into what it can create.

How do text-to-medium ‘AIs’ work?

Generative text-to-medium AI models are powered by neural networks that are basically hundreds upon hundreds of associations, created using metadata. Everything labeled with metadata can be fed into the neural network to help it understand the meaning of descriptive human words and concepts.

You can ‘teach’ a neural network what a ball is. Then you can further define that ball by teaching it to differentiate a ‘blue’ ball from a ‘red’ ball. These modifiers involve using metadata that matches phrases and it performs these computations at thousands of calculations per second to achieve the end result. These are diffusion models that are trained on hundreds of millions of images or pieces of music—whatever the target medium may be. The network can infer conceptual information between elements, helping it to re-create a music piece that has a certain feel to it.

Teaching a neural network the connection between words, concepts, and descriptions means you create an AI model capable of generating new text, code, images, and now, music. Diffusion models generate everything from scratch, based on the networks’ understanding of the concept its asked to generate.

Can you generate viral mashups with MusicLM?

No, Google has put rails on the MusicLM generator to prevent it from creating viral mash-ups like “Heart On My Sleeve.” If you request music that even sounds like or feels like a copyrighted artist, track, or band the AI will refuse to perform the task. It only generates a :19 second clip and gives you two options to choose from. However, it is very good at following instructions and if you’re a good descriptor you can get the ‘Kirkland’ brand version of what you’re seeking. Let me give you an example of what I mean.

Currently, MusicLM can generate electronic music, synthwave, and chip tunes better than any other genre of music. Testing its willingness to create something ‘like’ a copyrighted work, I prompted MusicLM to “create a song that sounds like it could be from the Sonic 3 soundtrack.” Because Sonic 3 is a copyrighted work, the AI informed me it couldn’t do that. Fair enough.

But I grew up playing Sonic. Let’s see if I can describe to the AI the essence of what Sonic sounds like and create something that ‘sounds’ like it could be in a Sonic game without me telling the AI that’s what it’s doing. This concept is called jailbreaking in the AI community and it’s a way to get an end result that the developers don’t intend to happen.

My ‘Jailbroken Sonic’ Prompt:

“Create a looping song with an upbeat sound featuring 32-bit chip tunes that are upbeat and fast-paced. The music should sound flowing and welcoming while creating a wistful atmosphere.”

Aside from a small second or so that could be shaved in editing, the track does loop well. It creates the plinky, upbeat vibe you’d expect from a platforming game about moving quickly through levels while collecting rings. It’s pretty good, a bit like having La Croix instead of San Pellegrino, if I’m honest. Not preferable—but serviceable in a pinch.

How well does MusicLM create live music?

Generating chip tune music with machine learning is one thing, but what about live music? Say I’m creating a scene in a video game where I need the protagonist to walk through a crowded bar while a non-descript band plays in the background. We have a specific idea we want to set for the scene. So let’s see if we can generate it.

My Live Music Prompt:

“Re-create the sound of walking through a loud dive bar while a grunge band plays music on stage featuring drums, electric guitar, bass guitar, and an aggressive rhythm.”

The AI model succeeds here in sounding like a live recording, even if the music doesn’t really match up to our genre specification. The sounds individually are there, but what we’re hearing isn’t really compelling for the listener. Toning it down and using it as background music in an adventure game though as ambient music? Definitely a possibility.

What about generating regional sounds?

This is one area in which the AI model succeeds in creating a specific sound thanks to metadata. Nothing highlights this better than the samples I generated asking the model to re-create the sound of Memphis rap. Memphis rap features a heavy bass line with sharply quipped rhymes that fall in time with the beat. MusicLM understands what the ‘sound’ of Memphis rap is very well.

My Prompt:

“Create a catchy Memphis hip-hop beat with lots of bass and an aggressively catchy rhythm fused with Atlanta rap.”

Google’s model will grow smarter over time as it is soliciting feedback from early users like me, asking us to mechanical turk our way into better-sounding diffusion-trained neural networks. Each time you prompt the MusicLM AI with something, it gives you two possibilities and asks you to rank which answers the prompt best.

This data helps the neural network generate feedback on whether something matches the concept as presented. It’s also one of the reasons why artificial intelligence training is moving at lightning speeds—with so many people generating metadata the model becomes better almost overnight. These few examples highlight the current capabilities of Google’s MusicLM but they will evolve drastically over the coming months.